# Syncing K8s Cluster Resources to a Standby Cluster with etcd-carry

# Introduction

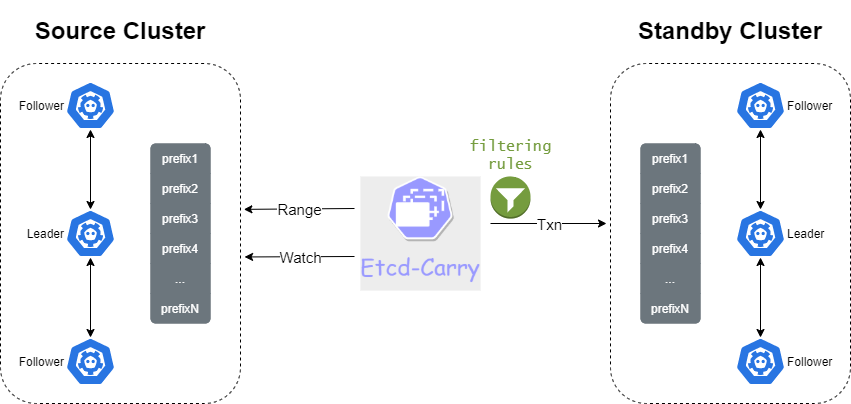

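etcd-carry is a real-time synchronization tool written in Go. Users define their own sync rules, and etcd-carry replicates the K8s cluster resources that match those rules to a standby K8s cluster in real time.

Syncing data from the primary K8s cluster into the etcd of the standby K8s cluster makes it possible to switch over to the standby cluster quickly when the primary cluster runs into trouble.

etcd-carry can use rules to control the order in which K8s cluster resources are synced, and it supports several kinds of sync rules: by namespace, by label selector, by fields in the resource specification, by GVK, and so on.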

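# How It Works

Once sync rules are defined, etcd-carry walks the K8s cluster resources in the primary cluster through the etcd range read interface and writes the resources that match the rules to the destination etcd. This step amounts to a full sync.

It then calls the etcd watch interface with the revision returned by the range read and listens for every change event after that revision. Whenever a key-value pair of a K8s resource changes in the primary etcd, etcd-carry receives the corresponding event and immediately writes the data that matches the rules to the standby etcd cluster, so the sync latency is very low.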
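The two phases can be modeled with a toy in-memory store. The sketch below is not etcd-carry's code and does not talk to etcd: `ToyEtcd`, `full_sync`, and `replay` are hypothetical names, and a plain list of events stands in for the watch stream. It only illustrates why the revision returned by the full read is handed to the watch: changes made after the snapshot are picked up exactly once.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List, Optional, Tuple


@dataclass
class Event:
    revision: int
    key: str
    value: Optional[str]  # None models a delete event


@dataclass
class ToyEtcd:
    """Tiny in-memory stand-in for an etcd cluster (hypothetical, not a client)."""
    revision: int = 0
    data: Dict[str, str] = field(default_factory=dict)
    history: List[Event] = field(default_factory=list)

    def put(self, key: str, value: str) -> None:
        self.revision += 1
        self.data[key] = value
        self.history.append(Event(self.revision, key, value))

    def delete(self, key: str) -> None:
        if key in self.data:
            self.revision += 1
            del self.data[key]
            self.history.append(Event(self.revision, key, None))

    def range_read(self, prefix: str) -> Tuple[Dict[str, str], int]:
        """Snapshot of all keys under prefix, plus the revision it was taken at."""
        snapshot = {k: v for k, v in self.data.items() if k.startswith(prefix)}
        return snapshot, self.revision

    def events_after(self, revision: int, prefix: str) -> List[Event]:
        """Change events strictly newer than the given revision."""
        return [e for e in self.history
                if e.revision > revision and e.key.startswith(prefix)]


def full_sync(source: ToyEtcd, dest: ToyEtcd, prefix: str,
              matches: Callable[[str], bool]) -> int:
    """Phase 1: copy every matching key and return the snapshot revision."""
    snapshot, revision = source.range_read(prefix)
    for key, value in snapshot.items():
        if matches(key):
            dest.put(key, value)
    return revision


def replay(source: ToyEtcd, dest: ToyEtcd, prefix: str,
           matches: Callable[[str], bool], since: int) -> int:
    """Phase 2: apply matching events newer than `since`; return the last revision seen."""
    last = since
    for event in source.events_after(since, prefix):
        if matches(event.key):
            if event.value is None:
                dest.delete(event.key)
            else:
                dest.put(event.key, event.value)
        last = event.revision
    return last


if __name__ == "__main__":
    primary, standby = ToyEtcd(), ToyEtcd()
    only_configmaps = lambda key: key.startswith("/registry/configmaps/")
    primary.put("/registry/configmaps/unit-test/influxdb1", "v1")
    primary.put("/registry/pods/default/web", "p1")  # does not match the rule
    rev = full_sync(primary, standby, "/registry/", only_configmaps)
    primary.put("/registry/configmaps/unit-test/influxdb1", "v2")
    rev = replay(primary, standby, "/registry/", only_configmaps, rev)
    print(standby.data)  # {'/registry/configmaps/unit-test/influxdb1': 'v2'}
```

In this model a key that does not match the rule never reaches the standby store, which mirrors the behavior described for etcd-carry when no rule selects a resource.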

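If no sync rules are specified, etcd-carry does not sync any data from the primary etcd cluster to the standby cluster. If the network between etcd-carry and either cluster is interrupted, syncing pauses until the connection is restored.

Syncing with etcd-carry is one-way: changes made on the standby etcd cluster are never synced back to the primary cluster. At present only K8s cluster resources can be synced. Service resources are not synced correctly, and the data that stateful services keep in PVs is not synced.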

# Build and Install

Download the source:

```shell
$ git clone https://github.com/etcd-carry/etcd-carry.git
```

Build:

```shell
$ cd etcd-carry
$ make
```

Runtime flags:

```shell
$ ./bin/etcd-carry --help
A simple command line for etcd mirroring

Usage:
  etcd-carry [flags]

Generic flags:
      --debug   enable client-side debug logging
      --mirror-rule string   Specify the rules to start mirroring (default "/etc/mirror/rules.yaml")

Etcd flags:
      --encryption-provider-config string   The file containing configuration for encryption providers to be used for storing secrets in etcd (default "/etc/mirror/secrets-encryption.yaml")
      --kube-prefix string   the prefix to all kubernetes resources passed to etcd (default "/registry")
      --max-txn-ops uint   Maximum number of operations permitted in a transaction during syncing updates (default 128)
      --rev int   Specify the kv revision to start to mirror

Transport flags:
      --dest-cacert string   Verify certificates of TLS enabled secure servers using this CA bundle for the destination cluster (default "/etc/kubernetes/dest/etcd/ca.crt")
      --dest-cert string   Identify secure client using this TLS certificate file for the destination cluster (default "/etc/kubernetes/dest/etcd/server.crt")
      --dest-endpoints strings   List of etcd servers to connect with (scheme://ip:port) for the destination cluster, comma separated
      --dest-insecure-skip-tls-verify   skip server certificate verification (CAUTION: this option should be enabled only for testing purposes)
      --dest-insecure-transport   Disable transport security for client connections for the destination cluster (default true)
      --dest-key string   Identify secure client using this TLS key file for the destination cluster (default "/etc/kubernetes/dest/etcd/server.key")
      --dial-timeout duration   dial timeout for client connections (default 2s)
      --keepalive-time duration   keepalive time for client connections (default 2s)
      --keepalive-timeout duration   keepalive timeout for client connections (default 6s)
      --source-cacert string   verify certificates of TLS-enabled secure servers using this CA bundle (default "/etc/kubernetes/source/etcd/ca.crt")
      --source-cert string   identify secure client using this TLS certificate file (default "/etc/kubernetes/source/etcd/server.crt")
      --source-endpoints strings   List of etcd servers to connect with (scheme://ip:port), comma separated
      --source-insecure-skip-tls-verify   skip server certificate verification (CAUTION: this option should be enabled only for testing purposes)
      --source-insecure-transport   disable transport security for client connections (default true)
      --source-key string   identify secure client using this TLS key file (default "/etc/kubernetes/source/etcd/server.key")
```
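The help text does not say how etcd-carry applies `--max-txn-ops`. Its default of 128 matches the default of the etcd server option with the same name, so one plausible reading is that pending writes are split into transactions that never exceed that limit. The sketch below shows only that batching idea; `chunk_ops` and `PutOp` are hypothetical names, not part of etcd-carry.

```python
from typing import Iterator, List, Sequence, Tuple

# (key, value) pair standing in for a single etcd put operation.
PutOp = Tuple[str, bytes]


def chunk_ops(ops: Sequence[PutOp], max_txn_ops: int = 128) -> Iterator[List[PutOp]]:
    """Yield consecutive batches holding at most max_txn_ops operations each."""
    if max_txn_ops < 1:
        raise ValueError("max_txn_ops must be at least 1")
    for start in range(0, len(ops), max_txn_ops):
        yield list(ops[start:start + max_txn_ops])


if __name__ == "__main__":
    pending = [(f"/registry/configmaps/demo/cm-{i}", b"...") for i in range(300)]
    print([len(batch) for batch in chunk_ops(pending)])  # [128, 128, 44]
```

Under this assumption, raising the flag above the limit configured on the destination etcd server would cause oversized transactions to be rejected, so the two values should be kept in line.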

# Testing

The deploy/examples directory of the etcd-carry project contains examples, which are used here to demonstrate the sync behavior.

The rules.yaml file holds the sync rules:

```yaml
filters:
  sequential:
    - group: apiextensions.k8s.io
      resources:
        - group: apiextensions.k8s.io
          version: v1beta1
          kind: CustomResourceDefinition
    - group: ""
      resources:
        - version: v1
          kind: Namespace
      labelSelectors:
        - matchExpressions:
            - key: test.io/namespace-kind
              operator: In
              values:
                - unique
                - unit-test
  secondary:
    - group: ""
      resources:
        - version: v1
          kind: Secret
      namespaceSelectors:
        - matchExpressions:
            - key: test.io/namespace-kind
              operator: In
              values:
                - unique
      fieldSelectors:
        - matchExpressions:
            - key: type
              operator: NotIn
              values:
                - kubernetes.io/service-account-token
      excludes:
        - resource:
            version: v1
            kind: Secret
          name: exclude-me-secret
          namespace: unique
    - group: ""
      resources:
        - version: v1
          kind: ConfigMap
      namespace: unit-test
      labelSelectors:
        - matchExpressions:
            - key: test.io/namespace-kind
              operator: Exists
```

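filters.sequential lists the K8s resources that must be synced first and in order. Under these rules, the following resources are synced with priority:

- First, all CRD resources in the primary K8s cluster are synced to the standby cluster;
- Next, Namespace resources labeled test.io/namespace-kind:unique or test.io/namespace-kind:unit-test are synced.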

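filters.secondary lists the resources that have no ordering requirement. Syncing them starts only after everything in filters.sequential has finished. Under these rules, the following resources are synced:

- all Secret resources whose type is not kubernetes.io/service-account-token and whose namespace carries the label test.io/namespace-kind:unique, except the Secret named exclude-me-secret in the unique namespace;
- ConfigMap resources in the unit-test namespace that carry the test.io/namespace-kind label.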
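To make the secondary rules concrete, here is a minimal sketch that evaluates them against the example objects used later in this walkthrough. It is not etcd-carry's matching code: `match_expressions`, `secret_is_synced`, and `configmap_is_synced` are hypothetical helpers, and the operator semantics (In, NotIn, Exists) are assumed to follow the usual Kubernetes set-based selector behavior.

```python
from typing import Any, Dict, List

Expr = Dict[str, Any]  # {"key": ..., "operator": ..., "values": [...]}


def match_expressions(exprs: List[Expr], attrs: Dict[str, str]) -> bool:
    """Return True when every expression holds for the given labels or fields."""
    for expr in exprs:
        key, op = expr["key"], expr["operator"]
        values = expr.get("values", [])
        if op == "In":
            ok = attrs.get(key) in values
        elif op == "NotIn":
            ok = attrs.get(key) not in values
        elif op == "Exists":
            ok = key in attrs
        else:
            raise ValueError(f"unsupported operator: {op}")
        if not ok:
            return False
    return True


# Namespace labels as shown in the etcd-carry log output of this walkthrough.
NAMESPACE_LABELS = {
    "unique": {"test.io/namespace-kind": "unique"},
    "unit-test": {"test.io/namespace-kind": "unit-test"},
}


def secret_is_synced(name: str, namespace: str, secret_type: str) -> bool:
    """Apply the Secret rule: excludes, namespaceSelectors, then fieldSelectors."""
    if (namespace, name) == ("unique", "exclude-me-secret"):
        return False
    namespace_ok = match_expressions(
        [{"key": "test.io/namespace-kind", "operator": "In", "values": ["unique"]}],
        NAMESPACE_LABELS.get(namespace, {}),
    )
    type_ok = match_expressions(
        [{"key": "type", "operator": "NotIn",
          "values": ["kubernetes.io/service-account-token"]}],
        {"type": secret_type},
    )
    return namespace_ok and type_ok


def configmap_is_synced(namespace: str, labels: Dict[str, str]) -> bool:
    """Apply the ConfigMap rule: fixed namespace plus an Exists label selector."""
    return namespace == "unit-test" and match_expressions(
        [{"key": "test.io/namespace-kind", "operator": "Exists"}], labels
    )


if __name__ == "__main__":
    secrets = [
        ("default-token-rf5xl", "unique", "kubernetes.io/service-account-token"),
        ("exclude-me-secret", "unique", "Opaque"),
        ("influxdb", "unique", "Opaque"),
        ("default-token-lvkwj", "unit-test", "kubernetes.io/service-account-token"),
        ("mysql", "unit-test", "Opaque"),
    ]
    print([(ns, name) for name, ns, kind in secrets
           if secret_is_synced(name, ns, kind)])  # [('unique', 'influxdb')]
```

Only the influxdb Secret in the unique namespace passes all three checks, which agrees with the result reported at the end of this walkthrough.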

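The secrets-encryption.yaml file holds the encryption configuration that kube-apiserver uses for resources stored in encrypted form. It is the file passed to kube-apiserver through its --encryption-provider-config startup flag.

For example, I run etcd-carry on a master node of the standby K8s cluster. First copy the certificate and key of the primary cluster's etcd to the local /etc/etcd-carry/source/ directory, then run the following command: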

```shell
$ ./bin/etcd-carry \
    --source-cacert=/etc/etcd-carry/source/ca.crt \
    --source-cert=/etc/etcd-carry/source/server.crt \
    --source-key=/etc/etcd-carry/source/server.key \
    --source-endpoints=10.20.144.29:2379 \
    --dest-cacert=/etc/kubernetes/pki/etcd/ca.crt \
    --dest-cert=/etc/kubernetes/pki/etcd/server.crt \
    --dest-key=/etc/kubernetes/pki/etcd/server.key \
    --dest-endpoints=192.168.180.130:2379 \
    --encryption-provider-config=./deploy/examples/secrets-encryption.yaml \
    --mirror-rule=./deploy/examples/rules.yaml
```

```text
probe ok!
context is stopping...
start to replay...
watch is stopping...
[Matched Sequential]: GVK: apiextensions.k8s.io/v1beta1, Kind=CustomResourceDefinition Name: bgpconfigurations.crd.projectcalico.org Namespace: Selector: map[]
[Matched Sequential]: GVK: apiextensions.k8s.io/v1beta1, Kind=CustomResourceDefinition Name: bgppeers.crd.projectcalico.org Namespace: Selector: map[]
[Matched Sequential]: GVK: apiextensions.k8s.io/v1beta1, Kind=CustomResourceDefinition Name: blockaffinities.crd.projectcalico.org Namespace: Selector: map[]
[Matched Sequential]: GVK: apiextensions.k8s.io/v1beta1, Kind=CustomResourceDefinition Name: clusterinformations.crd.projectcalico.org Namespace: Selector: map[]
[Matched Sequential]: GVK: apiextensions.k8s.io/v1beta1, Kind=CustomResourceDefinition Name: felixconfigurations.crd.projectcalico.org Namespace: Selector: map[]
[Matched Sequential]: GVK: apiextensions.k8s.io/v1beta1, Kind=CustomResourceDefinition Name: globalnetworkpolicies.crd.projectcalico.org Namespace: Selector: map[]
[Matched Sequential]: GVK: apiextensions.k8s.io/v1beta1, Kind=CustomResourceDefinition Name: globalnetworksets.crd.projectcalico.org Namespace: Selector: map[]
[Matched Sequential]: GVK: apiextensions.k8s.io/v1beta1, Kind=CustomResourceDefinition Name: hostendpoints.crd.projectcalico.org Namespace: Selector: map[]
[Matched Sequential]: GVK: apiextensions.k8s.io/v1beta1, Kind=CustomResourceDefinition Name: ipamblocks.crd.projectcalico.org Namespace: Selector: map[]
[Matched Sequential]: GVK: apiextensions.k8s.io/v1beta1, Kind=CustomResourceDefinition Name: ipamconfigs.crd.projectcalico.org Namespace: Selector: map[]
[Matched Sequential]: GVK: apiextensions.k8s.io/v1beta1, Kind=CustomResourceDefinition Name: ipamhandles.crd.projectcalico.org Namespace: Selector: map[]
[Matched Sequential]: GVK: apiextensions.k8s.io/v1beta1, Kind=CustomResourceDefinition Name: ippools.crd.projectcalico.org Namespace: Selector: map[]
[Matched Sequential]: GVK: apiextensions.k8s.io/v1beta1, Kind=CustomResourceDefinition Name: kubecontrollersconfigurations.crd.projectcalico.org Namespace: Selector: map[]
[Matched Sequential]: GVK: apiextensions.k8s.io/v1beta1, Kind=CustomResourceDefinition Name: networkpolicies.crd.projectcalico.org Namespace: Selector: map[]
[Matched Sequential]: GVK: apiextensions.k8s.io/v1beta1, Kind=CustomResourceDefinition Name: networksets.crd.projectcalico.org Namespace: Selector: map[]
[Matched Sequential]: GVK: apiextensions.k8s.io/v1beta1, Kind=CustomResourceDefinition Name: servicemonitors.monitoring.coreos.com Namespace: Selector: map[]
Key: /registry/apiextensions.k8s.io/customresourcedefinitions/, Matched: 16, Filtered: 16
PUT /registry/apiextensions.k8s.io/customresourcedefinitions/bgpconfigurations.crd.projectcalico.org
PUT /registry/apiextensions.k8s.io/customresourcedefinitions/bgppeers.crd.projectcalico.org
PUT /registry/apiextensions.k8s.io/customresourcedefinitions/blockaffinities.crd.projectcalico.org
PUT /registry/apiextensions.k8s.io/customresourcedefinitions/clusterinformations.crd.projectcalico.org
PUT /registry/apiextensions.k8s.io/customresourcedefinitions/felixconfigurations.crd.projectcalico.org
PUT /registry/apiextensions.k8s.io/customresourcedefinitions/globalnetworkpolicies.crd.projectcalico.org
PUT /registry/apiextensions.k8s.io/customresourcedefinitions/globalnetworksets.crd.projectcalico.org
Key: /registry/namespaces/, Matched: 19, Filtered: 0
PUT /registry/apiextensions.k8s.io/customresourcedefinitions/hostendpoints.crd.projectcalico.org
PUT /registry/apiextensions.k8s.io/customresourcedefinitions/ipamblocks.crd.projectcalico.org
PUT /registry/apiextensions.k8s.io/customresourcedefinitions/ipamconfigs.crd.projectcalico.org
PUT /registry/apiextensions.k8s.io/customresourcedefinitions/ipamhandles.crd.projectcalico.org
PUT /registry/apiextensions.k8s.io/customresourcedefinitions/ippools.crd.projectcalico.org
PUT /registry/apiextensions.k8s.io/customresourcedefinitions/kubecontrollersconfigurations.crd.projectcalico.org
PUT /registry/apiextensions.k8s.io/customresourcedefinitions/networkpolicies.crd.projectcalico.org
PUT /registry/apiextensions.k8s.io/customresourcedefinitions/networksets.crd.projectcalico.org
PUT /registry/apiextensions.k8s.io/customresourcedefinitions/servicemonitors.monitoring.coreos.com
Key: /registry/, Matched: 496, Filtered: 0
No more keys matched: /registry/ 496
Start to watch updates from revision 3852180
```

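The debug output shows that all CRDs on the primary K8s cluster have now been synced to the standby cluster.

The kube directory contains the test resources to be synced. On a master node of the primary K8s cluster, create these test objects with kubectl apply: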

```shell
$ kubectl apply -f deploy/examples/kube/
configmap/influxdb1 created
configmap/influxdb2 created
namespace/unique unchanged
namespace/unit-test unchanged
secret/influxdb unchanged
secret/mysql unchanged
secret/exclude-me-secret unchanged
$ kubectl get cm -nunique
NAME        DATA   AGE
influxdb2   1      2m47s
$ kubectl get secret -nunique
NAME                  TYPE                                  DATA   AGE
default-token-rf5xl   kubernetes.io/service-account-token   3      2m57s
exclude-me-secret     Opaque                                2      2m57s
influxdb              Opaque                                2      2m57s
$ kubectl get cm -nunit-test
NAME        DATA   AGE
influxdb1   1      3m
$ kubectl get secret -nunit-test
NAME                  TYPE                                  DATA   AGE
default-token-lvkwj   kubernetes.io/service-account-token   3      3m11s
mysql                 Opaque                                2      3m11s
```

At this point the etcd-carry debug output shows that resources have been synced over:

```text
[Matched Sequential]: GVK: /v1, Kind=Namespace Name: unique Namespace: Selector: map[test.io/namespace-kind:unique]
PUT /registry/namespaces/unique
[Matched Sequential]: GVK: /v1, Kind=Namespace Name: unit-test Namespace: Selector: map[test.io/namespace-kind:unit-test]
PUT /registry/namespaces/unit-test
[Matched Object]: GVK: /v1, Kind=ConfigMap Name: influxdb1 Namespace: unit-test Selector: map[test.io/namespace-kind:unit]
[Matched Rule]: Group: Resource: [/v1, Kind=ConfigMap] Namespace: unit-test Selector: [{map[] [{test.io/namespace-kind Exists []}]}] Exclude: [] Field: []
PUT /registry/configmaps/unit-test/influxdb1
[Matched Object]: GVK: /v1, Kind=Secret Name: influxdb Namespace: unique Selector: map[]
[Matched Rule]: Group: Resource: [/v1, Kind=Secret] Namespace: Selector: [] Exclude: [{/v1, Kind=Secret exclude-me-secret unique []}] Field: [{map[] [{type NotIn [kubernetes.io/service-account-token]}]}]
PUT /registry/secrets/unique/influxdb
```
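The PUT lines in the log show where each object lives in etcd: the key starts with the prefix set by `--kube-prefix`, followed by the resource path, the namespace for namespaced objects, and the object name. The helper below rebuilds those keys. `etcd_key` is a hypothetical function, and the layout is inferred only from the keys printed in this walkthrough, so other resource types may be stored differently.

```python
from typing import Optional


def etcd_key(resource: str, name: str, namespace: Optional[str] = None,
             kube_prefix: str = "/registry") -> str:
    """Build an etcd key shaped like the ones in the PUT lines of the log."""
    parts = [kube_prefix.rstrip("/"), resource.strip("/")]
    if namespace:
        # Namespaced objects carry the namespace between resource and name.
        parts.append(namespace)
    parts.append(name)
    return "/".join(parts)


if __name__ == "__main__":
    print(etcd_key("namespaces", "unique"))
    # /registry/namespaces/unique
    print(etcd_key("configmaps", "influxdb1", namespace="unit-test"))
    # /registry/configmaps/unit-test/influxdb1
    print(etcd_key("apiextensions.k8s.io/customresourcedefinitions",
                   "ippools.crd.projectcalico.org"))
    # /registry/apiextensions.k8s.io/customresourcedefinitions/ippools.crd.projectcalico.org
```

Keys built this way can be compared against the standby etcd when checking that a particular object has arrived.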

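According to the rules in rules.yaml, the following resources should be synced:

- all CRDs
- the unique namespace
- the unit-test namespace
- the Secret named influxdb in the unique namespace
- the ConfigMap named influxdb1 in the unit-test namespace

Checking on the standby K8s cluster confirms that this is the case.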